In this post, I’d like to cover some of the new Docker network features. Docker 1.9 introduced user-defined networks, and the most recent release, 1.10, added several more features. We’ll cover the basics of the new network model and walk through examples of what these features provide.

So what’s new? Well – lots. To start with, let’s take a look at a Docker host running the newest version of Docker (1.10).

Note: I’m running this demo on CentOS 7 boxes. The default repository had version 1.8, so I updated to the latest release using the update method shown in a previous post here. Before you continue, verify that ‘docker version’ shows you’re on the correct release.

You’ll notice that the Docker CLI now provides a new ‘docker network’ command for interacting with networks…

Alright – so let’s start with the basics and see what’s already defined…

By default, a base Docker installation has these three networks defined. The networks are permanent and cannot be modified. Taking a closer look, you’ll likely notice that these predefined networks are the same as the network models we had in earlier versions of Docker. You could start a container in bridge mode by default, in host mode by specifying ‘--net=host’, and without an interface by specifying ‘--net=none’. In short, everything that was there before is still here. To make sure everything still works as expected, let’s run through building a container under each network type.

Note: These exact commands were taken from my earlier Docker networking 101 series to show that the command syntax has not changed with the addition of multi-host networking. Those posts can be found here.

Host mode

docker run -d --name web1 --net=host jonlangemak/docker:webinstance1

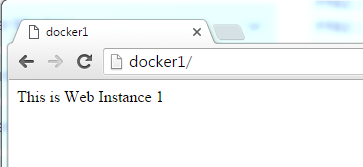

Executing the above command spins up a test web server with the container’s network stack mapped directly to that of the host. Once the container is running, we should be able to access the web server through the Docker host’s IP address…

Note: You either need to disable firewalld on the Docker host or add the appropriate rules for this to work.

Bridge mode

docker run -d --name web1 -p 8081:80 jonlangemak/docker:webinstance1
docker run -d --name web2 -p 8082:80 jonlangemak/docker:webinstance2

Here we’re running the default bridge mode and mapping ports into the container. Running those two containers should give you the web server you’re looking for on ports 8081 and 8082…

In addition, if we connect to the containers directly, we can see that traffic between the two containers crosses the docker0 bridge directly and never leaves the host…

Here we can see that web1 has an ARP entry for web2. Looking at web2, we can see that its MAC address matches…

None mode

docker run -d --name web1 --net=none jonlangemak/docker:webinstance1

In this example we can see that the container doesn’t receive any interface at all…

As you can see, all three modes work just as they did in previous versions of Docker. Now that we’ve covered the existing network functions, let’s talk about the new user-defined networks…

User defined bridge networks

The simplest user-defined network is the bridge, and defining a new one is easy. Here’s a quick example…

docker network create --driver=bridge --subnet=192.168.127.0/24 --gateway=192.168.127.1 --ip-range=192.168.127.128/25 testbridge

Here I create a new bridge named ‘testbridge’ and provide the following attributes…

Gateway – I set this to 192.168.127.1, which becomes the IP address of the bridge created on the Docker host. We can see this by looking at the Docker host’s interfaces…

Subnet – I specified this as 192.168.127.0/24. We can see in the output above that this is the CIDR associated with the bridge.

IP-range – If you want Docker to allocate container IPs from a smaller range within the subnet, use this flag. The range you specify must fall within the bridge subnet itself. In my case, I specified the second half of the subnet, so when I start a container on this bridge, it gets an IP from 192.168.127.128/25…
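Since the gateway and the IP range must both fall inside the subnet, a quick pre-flight check can catch a typo before ‘docker network create’ is run. The following is a minimal pure-bash sketch. It handles IPv4 only, and ‘ip_to_int’ and ‘cidr_contains’ are hypothetical helper names, not part of Docker.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not part of Docker); IPv4 only.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
# 10# forces base 10 so octets like "08" are not parsed as octal.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (10#$a << 24) | (10#$b << 16) | (10#$c << 8) | 10#$d ))
}

# Succeed if the block $2 lies entirely inside the block $1.
# A bare address (no /len) is treated as a /32.
cidr_contains() {
  local outer_ip=${1%/*} outer_len=32 inner_ip=${2%/*} inner_len=32
  [[ $1 == */* ]] && outer_len=${1#*/}
  [[ $2 == */* ]] && inner_len=${2#*/}
  # A larger (shorter-prefix) block can never fit inside a smaller one.
  (( inner_len >= outer_len )) || return 1
  # Compare only the network bits of the outer prefix.
  local mask=$(( (0xFFFFFFFF << (32 - outer_len)) & 0xFFFFFFFF ))
  (( ( $(ip_to_int "$outer_ip") & mask ) == ( $(ip_to_int "$inner_ip") & mask ) ))
}
```

With the ‘testbridge’ values above, both ‘cidr_contains 192.168.127.0/24 192.168.127.128/25’ and ‘cidr_contains 192.168.127.0/24 192.168.127.1’ succeed. A range such as 192.168.128.0/25 would be rejected.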

Our new bridge acts much like the docker0 bridge. Ports can be mapped to the physical host in the same manner. In the above example, we mapped port 8081 to port 80 in the container. Despite this container being on a different bridge, the connectivity works all the same…

We can make this example slightly more interesting by removing the existing container, removing the ‘testbridge’, and redefining it slightly differently…

docker stop web1
docker rm web1
docker network rm testbridge
docker network create --driver=bridge --subnet=192.168.127.0/24 --gateway=192.168.127.1 --ip-range=192.168.127.128/25 --internal testbridge

The only change here is the addition of the ‘--internal’ flag, which prevents containers on the bridge from communicating outside it. Let’s check this out by starting the container like this…

docker run -d --net=testbridge -p 8081:80 --name web1 jonlangemak/docker:webinstance1

You’ll note that in this case, we can no longer access the web server container through the exposed port…

Clearly, the ‘--internal’ flag prevents containers attached to the bridge from talking outside of the host. So while we can now define new bridges and attach newly spawned containers to them, that by itself is not terribly interesting. What would be more interesting is the ability to connect existing containers to these new bridges. As luck would have it, the ‘docker network connect’ and ‘docker network disconnect’ commands add and remove containers from any defined network. Let’s start by attaching the container web1 to the default docker0 bridge (bridge)…

docker network connect bridge web1

If we look at the network configuration of the container, we can see that it now has two NICs: one on ‘bridge’ (the docker0 bridge) and another on ‘testbridge’…
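Besides looking inside the container, you can ask the daemon which networks a container is attached to. The following sketch uses a Go template with ‘docker inspect’. It assumes the ‘.NetworkSettings.Networks’ map that Docker 1.9 and later expose, and the helper name ‘container_networks’ is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print "<network> <ip>" for each network a container is attached to.
# Relies on the .NetworkSettings.Networks map exposed by `docker inspect` (Docker 1.9+).
container_networks() {
  docker inspect -f '{{range $net, $cfg := .NetworkSettings.Networks}}{{$net}} {{$cfg.IPAddress}}{{"\n"}}{{end}}' "$1" |
    sed '/^$/d'   # drop the blank line left after the last entry
}
```

At this point, ‘container_networks web1’ should list two entries: one for ‘bridge’ and one for ‘testbridge’.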

If we check again, we’ll see that we can now once again access the web server through the mapped port across the ‘bridge’ interface…

Next, let’s spin up our web2 container, and attach it to our default docker0 bridge…

docker run -d -p 8082:80 --name web2 jonlangemak/docker:webinstance2

Before we get too far – let’s take a logical look at where we stand…

We have a physical host (docker1) with a NIC called ‘ENS0’ that sits on the physical network with the IP address 10.20.30.230. That host has two Linux bridges, ‘bridge’ (docker0) and ‘testbridge’, each with its own IP address. We also have two containers: web1, which is attached to both bridges, and web2, which is attached only to the native Docker bridge.

Given this diagram, you might assume that web1 and web2 can communicate directly with each other since they are connected to the same bridge. However, if you recall our earlier posts, Docker has something called ICC (Inter-Container Communication) mode. When ICC is set to false, containers can’t communicate with each other directly across the docker0 bridge.

Note: There’s a whole section on ICC and linking below, so don’t worry if you don’t recall!

In this example, I have set ICC mode to false, meaning that web1 cannot talk to web2 across the docker0 bridge unless we define a link. However, ICC mode only applies to the default bridge (docker0). If we connect both containers to ‘testbridge’, they should be able to communicate directly across that bridge. Let’s give it a try…

docker network connect testbridge web2

So let’s try from the container and see what happens…

Success. User defined bridges are pretty easy to define and map containers to. Before we move on to user defined overlays, I want to briefly talk about linking and how it’s changed with the introduction of user defined networks.

Container Linking

Docker linking has been around since the early versions and was commonly mistaken for some kind of network feature or function. In reality, it has very little to do with network policy, particularly in Docker’s default configuration. Let’s take a quick look at how linking worked before user-defined networks.

In a default configuration, Docker has ICC set to true. In this mode, all containers on the docker0 bridge can talk directly to each other on any port they like. We saw this earlier in the bridge mode example, where web1 and web2 were able to ping each other. If we change the default configuration and disable ICC, we get a different result. For instance, if we set ICC to ‘false’ in ‘/etc/sysconfig/docker’, the above example no longer works…
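ICC is a daemon-level setting that applies to the default docker0 bridge, so after editing ‘/etc/sysconfig/docker’ and restarting Docker, it is worth confirming what the daemon actually applied. The following is a minimal sketch. It assumes the change was made by adding the daemon flag ‘--icc=false’ to OPTIONS. It reads the ‘com.docker.network.bridge.enable_icc’ entry from the bridge’s Options map in ‘docker network inspect bridge’. That is where I would expect to find it, but your release may differ. The helper name is hypothetical.

```bash
#!/usr/bin/env bash
# Assumption: ICC was disabled by adding --icc=false to the daemon flags, e.g. in
# /etc/sysconfig/docker:  OPTIONS='--icc=false'  (then restart the Docker service).
#
# Hypothetical helper: exit 0 if ICC is enabled on the default bridge, 1 if it is
# disabled, 2 if the daemon could not be queried.
icc_enabled() {
  local value
  value=$(docker network inspect -f '{{index .Options "com.docker.network.bridge.enable_icc"}}' bridge) || return 2
  [[ $value == true ]]
}
```

For example: ‘icc_enabled || echo "ICC is off: use links or a user-defined network"’.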

If we want web1 to be able to access web2, we can ‘link’ the containers. Linking one container to another allows them to talk to each other on their exposed ports.

Above, you can see that once the link is in place, I can’t ping web1 from web2, but I can access web1 on its exposed port, which in this case is 80. So with ICC disabled, linking only allows linked containers to talk to each other on their exposed ports. This is the only way in which linking intersects with network or security policy. The other feature linking gives you is name and service resolution. For instance, let’s look at the environment variables on web2 once we link it to web1…

In addition to the environment variables, you’ll also notice that web2’s hosts file has been updated to include the IP address of the web1 container. This means I can now access the container by name rather than by IP address. As you can see, linking in previous versions of Docker had its uses, and that same functionality is still available today.

That being said, user-defined networks offer a pretty slick alternative to linking. Let’s go back to our example above, where web1 and web2 communicate across the ‘testbridge’ bridge. At this point we haven’t defined any links at all, but let’s try pinging web2 by name from web1…

OK, so that’s pretty cool, but how is it working? Let’s check the environment variables and the hosts file on the container…

Nothing here statically maps the name web2 to an IP address. So how is this working? Docker now has an embedded DNS server. Container names are registered with the Docker daemon and are resolvable by other containers attached to the same user-defined network. However, this functionality ONLY exists on user-defined networks. You’ll note that the above ping returned an IP address associated with ‘testbridge’, not the default docker0 bridge.

That means I no longer need to statically link containers together for them to communicate by name. In addition to this automatic behavior, you can also define network-scoped aliases and links on user-defined networks. For example, try running these two commands…

docker network disconnect testbridge web1
docker network connect --alias=thebestserver --link=web2:webtwo testbridge web1

Above, we removed web1 from ‘testbridge’ and then re-added it, specifying a link and an alias. When used with user-defined networks, the link flag functions much as it did in the legacy linking method: web1 can resolve the container web2 either by its name or by its linked alias ‘webtwo’. In addition, user-defined networks provide what are referred to as ‘network-scoped aliases’. These aliases can be resolved by any container on the user-defined network segment. So whereas links are defined by the container that wishes to use the link, aliases are defined by the container advertising the alias. Let’s log into each container and try pinging via the link and the alias…

In the case of web1, it’s able to ping both the defined link as well as the alias name. Let’s try web2…

So we can see that links used with user-defined networks are locally significant to the container. Aliases, on the other hand, are associated with a container when it joins a network and are resolvable by any container on that same user-defined network.
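To repeat the link-versus-alias comparison in one pass, the hypothetical helper below runs ‘ping’ from inside each container with ‘docker exec’ and reports which names resolve. It assumes the images include a ‘ping’ binary, which the post already relies on when pinging from these containers.

```bash
#!/usr/bin/env bash
# Hypothetical helper: for every source container and every name, report whether the
# name resolves and answers a ping from inside that container.
# Assumes the images ship a `ping` binary (the post pings from inside these containers).
# $1 and $2 are space-separated lists and are word-split on purpose.
resolution_matrix() {
  local from name
  for from in $1; do
    for name in $2; do
      if docker exec "$from" ping -c 1 "$name" >/dev/null 2>&1; then
        echo "$from -> $name: ok"
      else
        echo "$from -> $name: fail"
      fi
    done
  done
}
```

For example, run ‘resolution_matrix "web1 web2" "webtwo thebestserver"’. Based on the results above, ‘web2 -> webtwo’ should be the only failure, because the link belongs to web1 while the alias is visible to the whole network.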

User defined overlay networks

The second and last built-in user-defined network type is the overlay. Unlike the bridge type, the overlay requires an external key-value store to hold information such as the networks, endpoints, IP addresses, and discovery information. In most examples that store is Consul, but it can also be etcd or ZooKeeper. So let’s look at the lab we’re going to use for this example…

Here we have 3 Docker hosts. Docker1 and docker2 live on 10.20.30.0/24 and docker3 lives on 192.168.30.0/24. Starting with a blank slate, all of the hosts have the docker0 bridge defined but no other user defined network or containers running.

The first thing we need to do is to tell all of the Docker hosts where to find the key value store. This is done by editing the Docker configuration settings in ‘/etc/sysconfig/docker’ and adding some option flags. In my case, my ‘OPTIONS’ now look like this…

OPTIONS='-H tcp://0.0.0.0:2375 -H unix:///var/run/docker.sock --cluster-store=consul://10.20.30.230:8500/network --cluster-advertise=ens18:2375'

Make sure that you adjust your options to account for the IP address of the Docker host running Consul and the interface name defined under the ‘cluster-advertise’ flag. Update these options on all hosts participating in the cluster and then make sure you restart the Docker service.
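After the restart, it is worth confirming that each daemon actually picked up the new flags. When the cluster settings are configured, ‘docker info’ lists the cluster store and the advertise address. The following small sketch pulls out those lines and fails if no store is reported. The function name is hypothetical, and the label match is case-insensitive in case the wording differs between releases.

```bash
#!/usr/bin/env bash
# Hypothetical helper: show the cluster settings the running daemon reports and
# fail if no cluster store is configured (e.g. the new OPTIONS were not picked up).
# Labels are matched case-insensitively in case their capitalization differs by release.
check_cluster_config() {
  local info
  info=$(docker info 2>/dev/null) || return 2
  awk 'tolower($0) ~ /^ *cluster (store|advertise):/ { sub(/^ */, ""); print }' <<< "$info"
  grep -qi '^ *cluster store: *[^ ]' <<< "$info"
}
```

Run ‘check_cluster_config’ on every host. Each should print the consul:// URL you configured.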

Once Docker is back up and running, we need to deploy the aforementioned key-value store for the overlay driver to use. As luck would have it, Consul is available as a container image (progrium/consul), so let’s deploy that container on docker1…

docker run -d -p 8500:8500 -h consul --name consul progrium/consul -server -bootstrap
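With ‘-bootstrap’, the single Consul server elects itself leader, and this can take a moment after the container starts. The following minimal polling sketch waits for that before you define networks. It assumes ‘curl’ is installed on the host and that port 8500 is published as in the command above. ‘/v1/status/leader’ is Consul’s HTTP endpoint that returns the current leader’s address, or an empty string while there is none. The helper name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: poll Consul's HTTP API until a leader has been elected.
# /v1/status/leader returns the leader as a quoted "ip:port" string, or "" if none.
# Assumes curl on the host and port 8500 published as in the docker run above.
wait_for_consul() {
  local host=$1 tries=${2:-30} i leader
  for (( i = 1; i <= tries; i++ )); do
    leader=$(curl -s --max-time 2 "http://${host}:8500/v1/status/leader") || leader=""
    if [[ -n $leader && $leader != '""' ]]; then
      echo "Consul leader: $leader"
      return 0
    fi
    if (( i < tries )); then sleep 1; fi
  done
  echo "No Consul leader reported at ${host}:8500 after ${tries} attempts" >&2
  return 1
}
```

For this lab, that would be ‘wait_for_consul 10.20.30.230’.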

Once the Consul container is running, we’re all set to start defining overlay networks. Let’s go over to the docker3 host and define an overlay network…

docker network create -d overlay --subnet=10.10.10.0/24 testoverlay
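The overlay is its own network, carried over the hosts’ LAN. Its subnet (10.10.10.0/24 here) should therefore not overlap the networks the Docker hosts themselves sit on (10.20.30.0/24 and 192.168.30.0/24 in this lab). The following pure-bash sketch checks for overlap. It handles IPv4 only, and the helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not part of Docker); IPv4 only, both arguments in a.b.c.d/len form.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (10#$a << 24) | (10#$b << 16) | (10#$c << 8) | 10#$d ))
}

# Succeed if two CIDR blocks share any address. CIDR blocks are either nested or
# disjoint, so they overlap exactly when they agree on the bits of the shorter prefix.
cidr_overlaps() {
  local a_ip=${1%/*} a_len=${1#*/} b_ip=${2%/*} b_len=${2#*/}
  local len=$(( a_len < b_len ? a_len : b_len ))
  local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( ( $(ip_to_int "$a_ip") & mask ) == ( $(ip_to_int "$b_ip") & mask ) ))
}
```

For this lab, both ‘cidr_overlaps 10.10.10.0/24 10.20.30.0/24’ and ‘cidr_overlaps 10.10.10.0/24 192.168.30.0/24’ fail (no overlap), which is what we want.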

Now if we look at docker1 or docker2, we should see the new overlay defined…

Perfect, so things are working as expected. Let’s now run one of our web containers on the host docker3…

Note: Unlike bridges, overlay networks do not pre-create the required interfaces on the Docker host until they are used by a container. Don’t be surprised if you don’t see these generated the instant you create the network.

docker run -d --net=testoverlay -p 8081:80 --name web1 jonlangemak/docker:webinstance1

Nothing too exciting here. Much like our other examples, we can now access the web server by browsing to the host docker3 on port 8081…

Let’s fire up the same container on docker2 and see what we get…

So it seems container names must be unique across a user-defined overlay. That makes sense, so let’s instead run the second web instance on this host…

docker run -d --net=testoverlay -p 8082:80 --name web2 jonlangemak/docker:webinstance2

Once this container is running, let’s test the overlay by pinging web1 from web2…

Very cool. If we look at the physical network between docker2 and docker3 we’ll actually see the VXLAN encapsulated packets traversing the network between the two physical docker hosts…

It should be noted that there isn’t a bridge associated with the overlay itself. However – there is a bridge defined on each host which can be used for mapping ports of the physical host to containers that are a member of an overlay network. For instance, let’s look at the interfaces defined on the host docker3…

Notice that there’s a ‘docker_gwbridge’ bridge defined. If we look at the interfaces of the container itself, we see that it also has two interfaces…

Eth0 is a member of the overlay network, while eth1 is a member of the gateway bridge we saw defined on the host. If you need to expose a port from a container on an overlay network, it is exposed through the ‘docker_gwbridge’ bridge. However, much like with the user-defined bridge, you can prevent external access by specifying the ‘--internal’ flag during network creation. This prevents the container from receiving an additional interface on the gateway bridge. It does not, however, prevent the ‘docker_gwbridge’ from being created on the host.

Since our last example is going to use an internal overlay, let’s delete the web1 and web2 containers as well as the overlay network and rebuild the overlay network using the internal flag…

# docker2
docker stop web2
docker rm web2
# docker3
docker stop web1
docker rm web1
docker network rm testoverlay
docker network create -d overlay --internal --subnet=10.10.10.0/24 internaloverlay
docker run -d --net=internaloverlay --name web1 jonlangemak/docker:webinstance1
# docker2
docker run -d --net=internaloverlay --name web2 jonlangemak/docker:webinstance2

So now we have two containers, each with a single interface on the overlay network. Let’s make sure they can talk to each other…

Perfect, the overlay is working. So at this point – our diagram sort of looks like this…

Not very exciting at this point especially considering we have no means to access the web server running on either of these containers from the outside world. To remedy that, why don’t we deploy a load balancer on docker1. To do this we’re going to use HAProxy so our first step will be coming up with a config file. The sample I’m using looks like this…

global
    log 127.0.0.1 local0
    log 127.0.0.1 local1 notice

defaults
    log global
    mode http
    option httplog
    option dontlognull
    option forwardfor
    option http-server-close
    timeout connect 5000
    timeout client 50000
    timeout server 50000
    stats enable
    stats auth user:password
    stats uri /haproxyStats

frontend all
    bind *:80
    use_backend webservice

backend webservice
    balance roundrobin
    option httpclose
    option forwardfor
    server web1 web1:80 check
    server web2 web2:80 check
    option httpchk HEAD /index.html HTTP/1.0

For the sake of this test, let’s just focus on the backend section, which defines two servers: web1, reachable at web1:80, and web2, reachable at web2:80. Save this config file on the host docker1. In my case, I put it in ‘/root/haproxy/haproxy.cfg’. Then fire up the container with this syntax…

docker run -d --net=internaloverlay --name haproxy -p 80:80 -v ~/haproxy:/usr/local/etc/haproxy/ haproxy

After this container kicks off, our topology now looks more like this…

So while the HAProxy container can now talk to both the backend servers, we still can’t talk to it on the frontend. If you recall, we defined the overlay as internal so we need to find another way to access the frontend. This can easily be achieved by connecting the HAProxy container to the native docker0 bridge using the network connect command…

docker network connect bridge haproxy

Once this is done, you should be able to hit the front end of the HAProxy container by hitting the docker1 host on port 80 since that’s the port we exposed.

And with any luck, you should see the HAProxy node load balancing requests between the two web server containers. Note that this also could have been accomplished by leaving the overlay network as an external network. In that case, the port 80 mapping we did with the HAProxy container would have been exposed through the ‘docker_gwbridge’ bridge and we wouldn’t have needed to add a second bridge interface to the container. I did it that way just to show you that you have options.
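To watch the round-robin balancing from a shell instead of a browser, the following small sketch requests the HAProxy frontend repeatedly and counts identical response bodies. It assumes ‘curl’ is available on the client and that the two demo images serve different pages, as they did on ports 8081 and 8082 earlier. The helper name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: request the HAProxy frontend N times and count identical
# response bodies. Assumes curl is installed and that web1 and web2 serve pages with
# different content, so each backend shows up as its own line.
sample_backends() {
  local url=$1 n=${2:-10} i
  for (( i = 0; i < n; i++ )); do
    # Collapse each body to a single line so uniq compares whole responses.
    curl -s "$url" | tr -d '\r\n'
    echo
  done | sort | uniq -c
}
```

For example, run ‘sample_backends http://10.20.30.230/ 10’ against docker1. With ‘balance roundrobin’ and both backends passing their health checks, the two counts should come out roughly even.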

Bottom line: there are lots of new features in the Docker networking space. I’m hoping to get another post out shortly to discuss other network plugins. Thanks for reading!

Great article!

I’m just preparing installation with 2 physical hosts for HA and overlay network feature is very useful for that.

By the way, when I run `docker network inspect testoverlay` from one of the Docker hosts, I only get the network members on that particular host. There are definitely other containers on different hosts connected to the network, and communication between them works fine.

Any idea whether this is by design or I’m missing something?

Is the consul container successfully running? If so, does each Docker host have the correct Docker options as I used in the post?

Excellent article. Thanks for taking time to write up.

I contribute to Tradewave and currently we use docker. We had to stitch most of the networking components ourselves, especially around container communication. But we are exploring Kubernetes at the moment.

I am glad to see Docker putting a lot of effort into networking features. Also, it’s on my to-do list to read your Kubernetes posts.

I am wondering whether we should build on top of Docker or just move to Kubernetes. If you’re in the SF area, I would love to talk more about this.

Unfortunately, I’m not in the SF area frequently, but I am there a few times a year. Feel free to reach out via email (on the contact page) if you’d like to chat more about it that way.

Thanks for reading!

Hi,

I’m trying the part under “User defined overlay networks”

I need to connect multiple Azure machines together so that I can have celery workers added to the pool.

I am using docker-compose, however, and am running into a couple of issues. I’ll paste my configs so far and hopefully you can help:

/usr/bin/sudo docker-compose up -d

/usr/bin/sudo docker network create -d overlay --subnet=10.0.1.0/24 workernet

then in the docker-compose.yml file:

consul:
  expose:
    - "8500"
  ports:
    - "8500:8500"
  image: progrium/consul
  build:
    context: .
    args:
      - "-server"
      - "-bootstrap"

I assume that my issue is in how to pass the -server and -bootstrap, as I get the following error message:

Error response from daemon: error getting pools config from store: could not get pools config from store: Unexpected response code: 500

I get the same error when I run it from the command line:

$ sudo docker network create -d overlay --subnet=10.0.1.0/24 workernet

Any guidance would be greatly appreciated, please let me know if you need further info. I’m running in Azure on Ubuntu 16.04. Docker 1.13.0 and docker-compose 1.10.0

Thanks!

I think it’s probably best to keep Compose out of the discussion for now and just focus on the network piece. It sounds like you can’t even pass the network create command to a host currently. Is that accurate? If so, can you paste in your Docker service settings? I’m not sure if you’re on systemd or not.

Hi, sorry, I’m new to docker, so not sure what you mean by “pass to host”. Where would I find service settings? If you mean where I define the services/containers, it’s all in the docker-compose.yml file. Ubuntu 16.04 is indeed systemd.

No worries. What I was getting at is that you can’t even manually create networks. Is that correct?

If so, we need to look at your Docker service settings. They’re usually in a systemd drop-in file or in /etc/sysconfig/docker.

ok, as far as I can determine, the service settings are located in /etc/systemd/system/multi-user.target.wants

lrwxrwxrwx 1 root root 34 Feb 7 15:14 docker.service -> /lib/systemd/system/docker.service

[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network.target docker.socket firewalld.service
Requires=docker.socket

[Service]
Type=notify
# the default is not to use systemd for cgroups because the delegate issues still
# exists and systemd currently does not support the cgroup feature set required
# for containers run by docker
ExecStart=/usr/bin/dockerd -H tcp://0.0.0.0:2375 -H fd:// -H unix:///var/run/docker.sock --cluster-store=consul://10.0.1.180:8500/network --cluster-advertise=enp0s3:2375
ExecReload=/bin/kill -s HUP $MAINPID
LimitNOFILE=1048576
# Having non-zero Limit*s causes performance problems due to accounting overhead
# in the kernel. We recommend using cgroups to do container-local accounting.
LimitNPROC=infinity
LimitCORE=infinity
# Uncomment TasksMax if your systemd version supports it.
# Only systemd 226 and above support this version.
TasksMax=infinity
TimeoutStartSec=0
# set delegate yes so that systemd does not reset the cgroups of docker containers
Delegate=yes
# kill only the docker process, not all processes in the cgroup
KillMode=process

[Install]
WantedBy=multi-user.target

OK, it looks like you have the right configuration in there. You need to define the ‘--cluster-advertise’ and ‘--cluster-store’ parameters to tell Docker where to find Consul. Can you confirm Docker is picking up these settings by running ‘docker info’? You should see the cluster items in that output. Do this on all your hosts. Next, we need to confirm that Consul is reachable. How are you running Consul?

bruce@host:/srv/compose$ sudo docker info
Containers: 7
 Running: 5
 Paused: 0
 Stopped: 2
Images: 5
Server Version: 1.13.0
Storage Driver: aufs
 Root Dir: /var/lib/docker/aufs
 Backing Filesystem: extfs
 Dirs: 79
 Dirperm1 Supported: true
Logging Driver: json-file
Cgroup Driver: cgroupfs
Plugins:
 Volume: local
 Network: bridge host macvlan null overlay
Swarm: inactive
Runtimes: runc
Default Runtime: runc
Init Binary: docker-init
containerd version: 03e5862ec0d8d3b3f750e19fca3ee367e13c090e
runc version: 2f7393a47307a16f8cee44a37b262e8b81021e3e
init version: 949e6fa
Security Options:
 apparmor
 seccomp
  Profile: default
Kernel Version: 4.4.0-62-generic
Operating System: Ubuntu 16.04.1 LTS
OSType: linux
Architecture: x86_64
CPUs: 1
Total Memory: 3.859 GiB
Name: host
ID: MDVA:H3U3:O33A:LFRW:TZFH:N3SI:2THF:4P3K:BKG2:6BDZ:M5CY:B7ZM
Docker Root Dir: /var/lib/docker
Debug Mode (client): false
Debug Mode (server): false
Registry: https://index.docker.io/v1/
WARNING: No swap limit support
Experimental: false
Cluster Store: consul://10.0.1.180:8500/network
Cluster Advertise: 10.0.1.180:2375
Insecure Registries:
 127.0.0.0/8
Live Restore Enabled: false

I should also ask: assuming the actual network my machine is attached to is 10.0.1.0/24, should I be defining a different network for the overlay? And should the cluster store then be on that network?

Sorry, missed the bit where you asked how I’m running consul. It’s in the docker-compose.yml file. I’ve included the bit at the top, there’s more below but not relevant:

version: "2"
services:
  amqp:
    expose:
      - "5672"
      - "15672"
    ports:
      - "5672:5672"
      - "15672:15672"
    image: rabbitmq:3.5.1-management
  consul:
    expose:
      - "8500"
    ports:
      - "8500:8500"
    image: progrium/consul
    build:
      context: .
      args:
        - "-server"
        - "-bootstrap"

I was trying to pass the -server and -bootstrap bits in, and this is the only way I could guess to do it.

A couple of things. Let’s hold off on Compose for now and focus on getting an overlay network created. Can you start the Consul container manually with just docker run? Something like…

docker run -d -p 8500:8500 -h consul --name consul progrium/consul -server -bootstrap

Once that’s running, make sure that the container is running and has a published port (docker port). Then if we get that far, try defining just a simple overlay network…

docker network create -d overlay testoverlay

Ok, yes, after I removed the consul container that was already there, those two commands ran with success.

So you should now see that network on the other nodes when you do a ‘docker network ls’. The next step would be to test running containers on different nodes on that network and seeing if they can reach each other directly.

One would hope. Here’s my question: How do I retrieve info about the network? Do you have any idea why I can’t specify a particular subnet? The reason is that once this makes it into production, I assume I’m going to be needing to hardcode certain things, so I’ll need to be able to specifically define the network to be used.

And to restate: When I create a network in this way, am I trying to actually join the LAN, or am I somehow just making up a new network subnet? IE, if my azure containers are all on 10.6.1.0/24, should I be using the same subnet in my overlay create, or should I maybe use like 192.168.0.0/24?

Thanks

So extend the network command to something like this…

docker network create -d overlay --subnet 172.16.16.0/24 testoverlay

Does that work? To answer your question, the overlay is its own network. It should be different from the network your hosts live on; if it isn’t, you could have issues. The overlay is an entirely new network that uses the servers’ LAN as a transport network. The LAN will never see the IPs from your overlay. Hope that makes sense.

Yes, that makes sense, thanks.

One more question: In my docker.services, this statement:

ExecStart=/usr/bin/dockerd -H tcp://0.0.0.0:2375 -H fd:// -H unix:///var/run/docker.sock --cluster-store=consul://10.0.1.180:8500/network --cluster-advertise=enp0s3:2375

Should the IP of the consul store be on the overlay network or my LAN? If it’s supposed to be on the overlay, how would I determine the IP of the consul container, would that not change each time it’s instantiated?

It should be on the LAN. The Docker service itself won’t communicate across the overlay; that’s just for containers. So make sure that Consul is running on a network that is reachable from the Docker hosts.

Hello my friend,

I built an application using MongoDB and R plumber and added both containers to the bridge network, as you can see below. How do I access the application from a browser now? Before, I accessed my solution at http://127.0.0.1:8000/__swagger__/, but now I don’t know where to access it.

docker network inspect bridge

[
    {
        "Name": "bridge",
        "Id": "5f82c9cbc957bb730f0cdd0f1a791f436c0f53d45dd224f7775dc0f80e1967f6",
        "Created": "2019-11-18T00:45:24.135178Z",
        "Scope": "local",
        "Driver": "bridge",
        "EnableIPv6": false,
        "IPAM": {
            "Driver": "default",
            "Options": null,
            "Config": [
                {
                    "Subnet": "172.17.0.0/16",
                    "Gateway": "172.17.0.1"
                }
            ]
        },
        "Internal": false,
        "Attachable": false,
        "Ingress": false,
        "ConfigFrom": {
            "Network": ""
        },
        "ConfigOnly": false,
        "Containers": {
            "4d2435cf22fd4f14db952433624f115054e8a53a361ddd305190341f77100fa5": {
                "Name": "r_app",
                "EndpointID": "a05f6a7e1a6ed3d05e9aa2c4f5c631fbb608527391138a0533025203e0c19144",
                "MacAddress": "02:42:ac:11:00:03",
                "IPv4Address": "172.17.0.3/16",
                "IPv6Address": ""
            },
            "f0fd581f2e8579462637910047b0c731139f88447120ddae8da4e117776dd101": {
                "Name": "dbmongo",
                "EndpointID": "d2269e774fff759b9f79123d4a2e500ca03bcd7d0baa01fe1094735d5b1d3551",
                "MacAddress": "02:42:ac:11:00:02",
                "IPv4Address": "172.17.0.2/16",
                "IPv6Address": ""
            }
        },
        "Options": {
            "com.docker.network.bridge.default_bridge": "true",
            "com.docker.network.bridge.enable_icc": "true",
            "com.docker.network.bridge.enable_ip_masquerade": "true",
            "com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",
            "com.docker.network.bridge.name": "docker0",
            "com.docker.network.driver.mtu": "1500"
        },
        "Labels": {}
    }
]

Sergio@SergioCarvalho MINGW64 /c/users/sergio/app
$ docker ps -a
CONTAINER ID   IMAGE          COMMAND                   CREATED          STATUS                   PORTS                NAMES
4d2435cf22fd   r-app          "/bin/sh -c 'R -e \"s…"   24 minutes ago   Up 24 minutes            8000/tcp, 8787/tcp   r_app
f0fd581f2e85   mongo          "docker-entrypoint.s…"    59 minutes ago   Up 59 minutes            27017/tcp            dbmongo
a404c507e55b   mongo:latest   "docker-entrypoint.s…"    4 hours ago      Exited (0) 2 hours ago                        mongo
