We’ve talked about docker in a few of my more recent posts but we haven’t really tackled how docker does networking. We know that docker can expose container services through port mapping, but that brings some interesting challenges along with it.

As with anything related to networking, our first challenge is to understand the basics, and in particular what connectivity options we have for the devices we want to connect to the network (in this case, docker containers). So the goal of this post is to review docker’s networking defaults. Once we know what our host connectivity options are, we can move quickly on to advanced container networking.

So let’s start with the basics. In this post, I’m going to be working with two docker hosts, docker1 and docker2. They sit on the network like this…

So nothing too complicated here: two basic hosts with a very basic network configuration. Let’s assume that you’ve installed docker and are running it with the default configuration. If you need instructions for the install see this. At this point, all I’ve done is configure a static IP on each host, configure a DNS server, run a ‘yum update’, and install docker.

Default Inter-host network communication

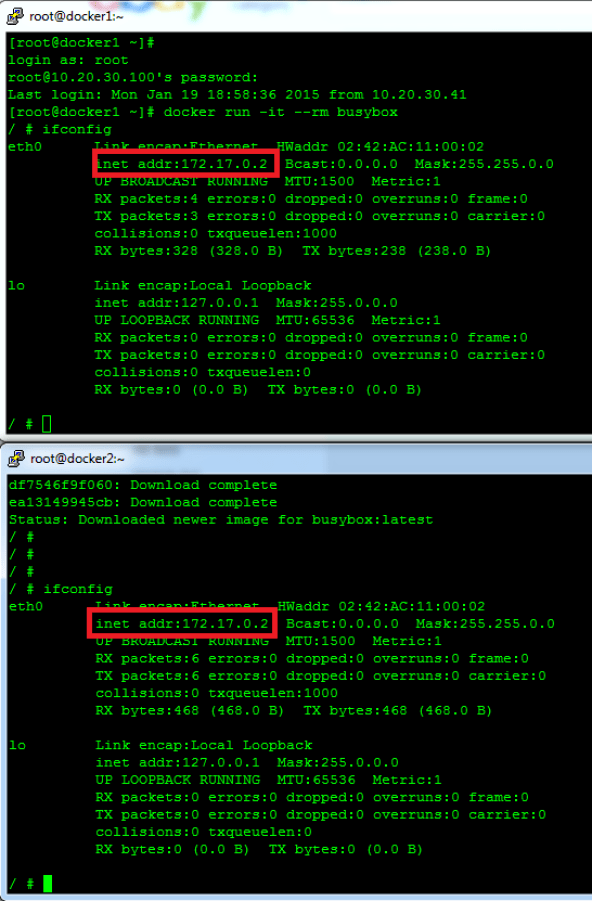

So let’s go ahead and start a container on each docker host and see what we get. We’ll run a copy of the busybox container on each host with this command…

docker run -it --rm busybox

Since we don’t have that container image locally, docker will go and pull busybox for us. Once it’s running, it drops us right into the shell. Then let’s see what IP addresses the containers are using…

So as you can see, the containers on both hosts are using the same IP address. This brings up an important aspect of docker networking: by default, all container networks are hidden from the real network. If we exit our containers and examine the iptables rules on each host, we’ll see the following…

There’s a masquerade (hide NAT) rule for all container traffic. This allows all of the containers to talk to the outside world (AKA the real network) but doesn’t allow the outside world to reach the containers on its own. As mentioned earlier, inbound access can be provided by mapping container ports to ports on the host’s network interface. For example, we can map port 8080 on the host to port 80 on the busybox container with the following run command…

docker run -it --rm -p 8080:80 busybox

If we run that command, we can see that iptables creates an associated NAT rule that forwards traffic destined for 8080 on the host (10.20.30.100 in this case) to port 80 on the container…
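To make the two translations concrete, here is a toy Python model of what the masquerade rule and the port-mapping rule do to a packet’s addresses. It is only a sketch of the idea, not how iptables works internally: the host IP 10.20.30.100 and the 8080→80 mapping come from the example above, while the container address 172.17.0.2 and the client 10.20.30.200 are hypothetical.

```python
from dataclasses import dataclass, replace
from ipaddress import IPv4Address, ip_address, ip_network
from typing import Optional

HOST_IP = ip_address("10.20.30.100")         # docker1's eth0 address (from the post)
CONTAINER_NET = ip_network("172.17.0.0/16")  # default docker0 network
CONTAINER_IP = ip_address("172.17.0.2")      # hypothetical busybox container address
PORT_MAP = {8080: (CONTAINER_IP, 80)}        # what "-p 8080:80" asks for


@dataclass(frozen=True)
class Packet:
    src: IPv4Address
    sport: int
    dst: IPv4Address
    dport: int


def outbound(pkt: Packet) -> Packet:
    """Masquerade-style source NAT: container traffic leaving the
    container network appears to come from the host's own IP."""
    if pkt.src in CONTAINER_NET and pkt.dst not in CONTAINER_NET:
        return replace(pkt, src=HOST_IP)
    return pkt  # container-to-container traffic is left untouched


def inbound(pkt: Packet) -> Optional[Packet]:
    """Destination NAT for a published port: a packet arriving at HOST_IP
    on a mapped port is rewritten to the container. Returns None when no
    mapping exists, i.e. nothing is forwarded to a container."""
    if pkt.dst == HOST_IP and pkt.dport in PORT_MAP:
        ip, port = PORT_MAP[pkt.dport]
        return replace(pkt, dst=ip, dport=port)
    return None


client = ip_address("10.20.30.200")  # hypothetical machine on the physical network
print(outbound(Packet(CONTAINER_IP, 40000, client, 80)).src)  # 10.20.30.100
print(inbound(Packet(client, 50000, HOST_IP, 8080)))
print(inbound(Packet(client, 50000, HOST_IP, 9090)))           # None
```

A real masquerade rule also rewrites source ports and relies on connection tracking so that replies find their way back to the right container; the model ignores both.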

So in the default model, if the busybox container on docker1 wants to talk to the busybox container on docker2, it can only do so through a port exposed on the host’s network interface. With that in mind, our network diagram for this scenario looks like this…

We have 3 very distinct network zones in this diagram: the physical network of 10.20.30.0/24, plus the networks created by each docker host for container networking. These networks live off of the docker0 bridge interface on each host. By default, this network is 172.17.0.0/16, and the docker0 bridge interface itself has an IP address of 172.17.42.1. We can see this by checking out the interfaces on the physical docker host…
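The address plan above can be checked with Python’s standard `ipaddress` module. This sketch uses the defaults quoted in the post (172.17.0.0/16 and 172.17.42.1 for docker0, 10.20.30.0/24 and 10.20.30.100 for the physical side); the eth0 address 10.20.30.101 for docker2 is a hypothetical example.

```python
from ipaddress import ip_address, ip_network

# Physical network and host addresses; docker2's address is a hypothetical example.
physical = ip_network("10.20.30.0/24")
hosts = {"docker1": ip_address("10.20.30.100"), "docker2": ip_address("10.20.30.101")}

# Default docker0 settings as described above, identical on every host.
docker0_net = {name: ip_network("172.17.0.0/16") for name in hosts}
docker0_ip = ip_address("172.17.42.1")

assert all(addr in physical for addr in hosts.values())  # both hosts share the LAN
assert docker0_ip in docker0_net["docker1"]              # bridge IP is inside the container net

# Same prefix on both hosts: a route for 172.17.0.0/16 could only point at one of them.
print(docker0_net["docker1"].overlaps(docker0_net["docker2"]))  # True
print(docker0_net["docker1"].overlaps(physical))                # False
print(docker0_net["docker1"].num_addresses)                     # 65536
```

That overlap is also why the two busybox containers earlier could end up with the same address: each host hands out addresses from an identical, private pool that the physical network knows nothing about.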

Here we can see the docker0 bridge, the eth0 interface on the 10.20.30.0/24 network, and the local loopback interface.

Default Intra-host network communication

This scenario is slightly more straightforward. Any containers living on the same docker0 bridge can talk to each other directly via IP. There’s no need for NAT or any other network tricks to allow this communication to occur. There is, however, one interesting docker enhancement that does apply to this scenario. Container linking often seems to get grouped under the ‘networking’ chapter of docker, but it doesn’t really have anything to do with networking at all. Let’s take this scenario for example…

Let’s assume that we want to run both busybox instances on the same docker host. Let’s also assume that these two containers will need to talk to each other in order to deliver a service. I’m not sure if you’ve picked up on this yet, but docker isn’t really designed to assign a specific IP address to a given container (note: there are some ways this can be done, but none of them are native to docker that I know of). That leaves us with dynamically allocated IP addresses, which makes consuming services on one container from another container rather hard to do. This is where container linking comes into play.

To link a container to another container, you simply pass the --link flag to the docker run command. For instance, let’s start a busybox container called busybox1…

docker run --name busybox1 -p 8080:8080 -it busybox

This will start a container named busybox1 running the busybox image and mapping port 8080 on the host to port 8080 on the container. Nothing too new here besides the fact that we’re naming the container. Now, let’s start a second container and call it busybox2…

docker run --name busybox2 --link busybox1:busybox1 -it busybox

Note that I included the ‘--link’ flag. This is what tells the container busybox2 to ‘link’ to busybox1. Now that busybox2 is loaded, let’s look at what’s going on in the container. First off, we notice that we can ping busybox1 by name…

If we look at the ‘/etc/hosts’ file, we see that docker created a host entry on busybox2 with the correct IP address for the busybox1 container. Pretty slick, huh? Also, if we check the container’s ENV variables, we see some interesting items there as well…
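The ping by name works because the resolver inside busybox2 can answer the name from /etc/hosts, where docker wrote the busybox1 entry. The sketch below performs that kind of lookup over a hypothetical copy of the file; the IP addresses and the container ID on the first line are made up for illustration.

```python
from typing import Optional

# Hypothetical /etc/hosts contents inside busybox2 after linking to busybox1.
HOSTS = """\
172.17.0.3      a1b2c3d4e5f6
127.0.0.1       localhost
::1             localhost ip6-localhost ip6-loopback
172.17.0.2      busybox1
"""


def resolve(name: str, hosts_text: str = HOSTS) -> Optional[str]:
    """Return the first address whose hostname/alias list contains `name`."""
    for line in hosts_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        addr, *names = line.split()
        if name in names:
            return addr
    return None


print(resolve("busybox1"))  # 172.17.0.2
```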

Interesting: I now have a bunch of ENV variables that tell me about the port mapping configuration on busybox1. Not only is this pretty awesome, it’s pretty critical when we start thinking about abstracting services in containers. Without this kind of info it would be hard to write applications that could make full use of docker and its dynamic nature. There’s a whole lot more info on linking and which ENV variables get created over at the docker website.
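As a sketch of how an application might consume those variables, the Python below reads the address and port of a linked service. It assumes the naming pattern docker used for legacy links (the upper-cased alias, then `_PORT_<port>_<PROTO>_ADDR` and `_PORT_<port>_<PROTO>_PORT`); the values in `sample_env` are hypothetical, and the docker links documentation remains the authority on the exact variable set.

```python
import os
from typing import Mapping, Tuple


def linked_endpoint(alias: str, port: int, proto: str = "tcp",
                    env: Mapping[str, str] = os.environ) -> Tuple[str, int]:
    """Return (address, port) for a linked container, read from the
    environment variables docker injects for a legacy --link."""
    prefix = f"{alias.upper()}_PORT_{port}_{proto.upper()}"
    return env[f"{prefix}_ADDR"], int(env[f"{prefix}_PORT"])


# Hypothetical values as seen inside busybox2 (started with --link busybox1:busybox1).
sample_env = {
    "BUSYBOX1_NAME": "/busybox2/busybox1",
    "BUSYBOX1_PORT": "tcp://172.17.0.2:8080",
    "BUSYBOX1_PORT_8080_TCP": "tcp://172.17.0.2:8080",
    "BUSYBOX1_PORT_8080_TCP_ADDR": "172.17.0.2",
    "BUSYBOX1_PORT_8080_TCP_PORT": "8080",
    "BUSYBOX1_PORT_8080_TCP_PROTO": "tcp",
}

print(linked_endpoint("busybox1", 8080, env=sample_env))  # ('172.17.0.2', 8080)
```

Inside a real linked container you would call `linked_endpoint("busybox1", 8080)` and let it read `os.environ`; a `KeyError` then signals that the link or the exposed port is missing.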

Last but not least, I want to talk about the ‘ICC’ flag. I mentioned above that any two containers on the same docker host can, by default, talk to one another. This is true, but only because the ICC flag defaults to true. ICC stands for Inter-Container Communication. If we set this flag to false, then ONLY containers that are linked can talk to one another, and even then ONLY on the ports the container exposes. Let’s look at a quick example…

Note: How you change the ICC flag on a host depends on the OS you’re running docker on. In my case I’m running CentOS 6.5.

First off, let’s set the ICC flag to false on the host docker1. To do this, edit the file /etc/sysconfig/docker. It should look like this when you first open it…

Change the ‘other_args’ line to read like this…

Save the file and restart the docker service. In my case, this is done with the command ‘service docker restart’. Once the service comes back up ICC should be disabled and we can continue with the test.

On host docker1 I’m going to download an apache container and run it, mapping port 80 on the container to port 8080 on the host…

Here we can see that I started a container called web, mapped the ports, and ran it as a daemon. Now, let’s start a second container called DB and link it to the web container…

Notice that the DB container can’t ping the web container. Let’s try and access the web container on the port it is exposing…

Aha! So it’s working as expected. I can only access the linked container on its exposed ports. Like I mentioned, though, container linking has very little to do with networking. ICC is as close as we get to actual network policy, and that doesn’t have much to do with what actually happens when we link containers.
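The behavior in this test can be summarized as a small decision function. This is a toy model of the policy as described in this post, not Docker’s implementation. Two choices are assumptions of the model: links are treated as one-way (consumer, provider) pairs, and a ping is represented as traffic with no port. The container names mirror the web and DB containers above.

```python
from typing import Dict, Optional, Set, Tuple


def can_connect(src: str, dst: str, port: Optional[int], *, icc: bool,
                links: Set[Tuple[str, str]],
                exposed: Dict[str, Set[int]]) -> bool:
    """Toy model of same-bridge reachability.

    port=None stands for traffic with no port, such as a ping (ICMP).
    links holds (consumer, provider) pairs, e.g. ("db", "web") for --link web:web.
    """
    if icc:
        return True  # default: every container on docker0 can reach every other
    if (src, dst) not in links:
        return False  # icc=false: unlinked containers are isolated
    return port is not None and port in exposed.get(dst, set())


links = {("db", "web")}  # DB was started with --link web:web
exposed = {"web": {80}}  # the apache container exposes port 80

print(can_connect("db", "web", None, icc=False, links=links, exposed=exposed))  # False (ping)
print(can_connect("db", "web", 80, icc=False, links=links, exposed=exposed))    # True
print(can_connect("web", "db", 80, icc=False, links=links, exposed=exposed))    # False
print(can_connect("db", "web", None, icc=True, links=links, exposed=exposed))   # True
```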

In my upcoming posts I’m going to cover some of the non-default options for docker networking.

Fascinating read. I haven’t made my way towards Docker yet, but had heard that Docker networking was a different animal. I’m going to have to study Docker use-cases further, because as it stands, it doesn’t seem intended to be a platform for delivering services to consumers outside of the containerized world. Port-mapping seems a painful way to go about it, but I assume the issue is the networking stack is shared by the containers?

Docker “networking” seems more of an infrastructure intended to allow Docker containers to communicate primarily with one another, eschewing traditional network methods. Which is fine. Traditional networking is a confining paradigm if you can get done what you need to without it.

Looking forward to the other posts on this when you get a chance.

Thanks Ethan! I think my interest in docker is driven mostly by the network ‘problem’ it creates. I think there are a couple of ways to solve the problem, but finding one that makes sense for each particular use case will be the challenge. Service proxy is one that seems to come up frequently, but I’m not sure it scales. Certainly coupling docker with a distributed key store gives you quite a few interesting options, but it doesn’t solve the problem on its own. I think the next few months/years will certainly be interesting ones as container technology continues to develop.

Nice article. Why do you think having a distributed DB gives a few interesting options? Doesn’t Midokura do something similar?

Thanks for reading! I’m not intimately familiar with Midokura, but I’m assuming it uses some sort of distributed key store (I’m also assuming that’s what you mean by distributed DB). I think it’s a foregone conclusion that single points of failure in a network (or anywhere for that matter) aren’t a great idea. What we want is a system with a scalable control plane that’s integrated into the data plane. I realize that’s ‘network talk’ but bear with me for a moment. Take CoreOS and etcd, for instance. The more nodes I have (compute power, i.e. the data plane), the more redundancy I add to the control plane (the distributed key store). Etcd can run on each node and it scales along with the compute. There’s no reliance on a central controller (or a couple of controllers) to keep track of state or configuration, since it’s distributed between all the nodes participating in etcd. Moreover, the system handles things like master elections, ensuring consensus between the nodes, and adding and removing participants from the distributed key store cluster. It just makes sense to me to have this information stored across the nodes rather than in a central controller or controller cluster.

Does that make sense? I realize that they don’t solve all of the problems but I think it’s certainly a step in the right direction.

Great posts Jon. Minor point, but running etcd on all nodes is really just for small-ish clusters. It’s more common to have a dedicated etcd cluster of 3, 5, or some other odd number of nodes (https://coreos.com/docs/cluster-management/setup/cluster-architectures/), since you don’t want to steal cycles from the app nodes.
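Part of the reason for odd sizes is majority quorum: etcd uses the Raft consensus algorithm, which needs more than half of the members to agree, so growing from an odd to an even member count raises the quorum without letting the cluster survive any more failures. The arithmetic, as a quick Python sketch:

```python
def quorum(n: int) -> int:
    """Smallest majority of an n-member cluster."""
    return n // 2 + 1


def tolerated_failures(n: int) -> int:
    """Members that can be lost while a majority can still be formed."""
    return n - quorum(n)


print("members quorum tolerated")
for n in range(1, 8):
    print(f"{n:7} {quorum(n):6} {tolerated_failures(n):9}")
```

Running it shows that 3 and 4 members both tolerate one failure, and 5 and 6 both tolerate two. A larger quorum also means the leader has to hear back from more members before a change is committed.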

But the bigger point is how often it will be updated and how quickly the cluster can converge. More nodes and more frequent changes mean slower convergence. This is going to limit the kinds of changes you can make (e.g. network flows vs. config).

Hi Chris! Great point, and one that I didn’t fully clarify. It was my understanding that the etcd nodes in a CoreOS cluster would scale up to a limit. That is, if I chose to deploy the distributed key store across all nodes, the system would realize that there were too many participants and put all but 5 (or so?) on a sort of standby. It sounds like that’s part of the etcd 0.4 release -> https://coreos.com/blog/etcd-0.4.0/

Sounds like you’re suggesting that a dedicated management cluster (one that doesn’t run ‘workload’) is the way to go. While I see the point, does that change the deployment model? Part of what I like about CoreOS (and any other system using a distributed key store) is that it just takes care of itself. Any node can replace any other node (for the most part). Would love to hear more of your thoughts on this.

PS: I must confess that I haven’t touched CoreOS in over a month and a half, so I’m sure I’m already behind given how fast-moving these projects are!

Great article. A very good primer on docker networking.

It is something that they have missed on their website and this serves as a great resource. I actually found this article through the Docker Weekly digest! Congratulations – you’ve made it! 🙂

I definitely see an evolution of services delivered through microservices thanks to containerisation. The ability to scale out with deployment of new containers and linking (IP or docker link!) is very handy. I see the disruption caused by containers providing the same value that virtual machines did back in 2002.

This definitely allows the notion of fail fast, fail cheap and links it with scale on demand.

Great stuff Jon.

Thanks Anthony! I agree. Containers bring a lot of interesting deployment options along with them. However, I think a lot of the change needed to make containers successful needs to come from the application side of the house. If the applications are written to utilize what docker can offer then we can start thinking differently about how we manage data center compute.

Containers in general bring up a lot of interesting questions for most aspects of the DC. Take the network for example. Do I need the ability to vMotion guests/containers? Maybe, or maybe not. Maybe I can just mark a host down in my container orchestration platform and let the system restart those containers on another node. If the application is designed correctly this could be feasible. Then again, maybe you’re running docker instances on top of your hypervisor. Lots of interesting options and lots of interesting questions to address.

I’d love to hear your thoughts on how you think docker and full blown hypervisors (VMware, KVM, etc) will play together in the DC space.

Totally agree with the scale-out aspects that microservices bring to the table. The question is how much networking needs to change when application architecture changes. Today’s networks are pretty static with respect to application changes. For example, applications like Hadoop, with their elephant and mice flows, add a lot of stress to the network, but the network has remained pretty much the same apart from a few tweaks here and there. This does not mean that the network is resilient to application models, but this is what we have today.

So, do we need a different networking model for microservice architectures? You have pointed out that vMotion (container motion) may not be required because of the way the orchestration system maintains state. Are there other things that may not be necessary, and things that are absolutely necessary?

My comment on Midokura was to point out that such distributed control plane already existed for VMs.

Lots of good comments and questions. I think, for the most part, we’re pretty good at building the physical network. The biggest problem these days for physical switching is big data and handling problems like TCP incast correctly (to buffer or not to buffer). If we consider physical switching and routing as ‘solved’, the real challenge becomes the new edge, which is going to be in the virtual space. The intelligence has to be there in my opinion. Much like the old QOS model, mark at the edge and let the core just route. We need to start doing the same for the new virtual edge.

Looks like a typo:

For example, we can map port 8080 on the host to port 8080 on the busybox container with the following run command…

Should be:

For example, we can map port 8080 on the host to port 80 on the busybox container with the following run command…

Updated! Good catch, thanks!

Thanks for the tutorial.

But what if I have some routable (public) alias IPs?

How can I assign them to each container?

With LXC we could edit the config file to do it.

To clarify, you’re interested in having actual routable (RFC 1918) space on the container? Or actual public routed internet space on the container? Either way, you want to be able to map a routed IP onto a container. If that’s the case, are you expecting to have a route for a particular subnet pointing at the docker host which would then route the traffic to the local container?

I have a blog coming up that covers some other docker network options that I think will address this. Either way, give me some clarification so that I make sure I cover your question.

I have a dedicated server, and on that server I have 16 IPs (failover IPs). When I start a container, I want to give it external access with its own IP, so with 16 running containers I would have 16 different IP addresses. When I put the IP in config.json, I lose the configuration after starting or stopping the container.

Can you provide a copy of the config file and how you’re editing/specifying the file to the container? If email is easier you can use the one on the about me page.

We have translated your great article into Chinese. We hope you can add a link to our version, thanks: http://dockerone.com/article/154

Thanks, I sent you the info. Look for my email.

I notice that when a container starts, its IP address can’t be changed, for example to fix it to a specific address.

Great article. For the life of me, I’ve been trying for the longest time to wrap my head around Docker “Networking / Linking”, and the way they have it on their website just doesn’t convey the basic points newbies or sysadmins need to understand what is really happening. Walking through each step you covered on “Linking” cleared up my confusion about how linking can be used, why it is used, and the networking aspects of Docker. I have read your other “Posts” and you have totally helped me understand how these containers are able to communicate. Don’t stop with the visual outlines. Very well done. Thanks

Thanks for the comments Vince! I’m glad the articles are helpful to you. I’m anxious to get to the Kubernetes networking post, but I have a couple more to cover first before I get that far. Thanks for reading!

Great article Jon, many thanks. Is this line correct?

docker run --name busybox2 --link busybox1:busybox1 -it busybox

Or should it be?

docker run --name busybox2 --link busybox1:busybox2 -it busybox (or --link busybox2:busybox1)

Cheers

Ignore me, I’ve read the docs now.

I was just replying to your first comment! Glad you sorted it out, it is a little confusing as to why they give you the option to alias the container but I can see a couple possible use cases.

Thanks for reading!

I followed your steps to set other_tag="-icc=false"

and ran docker like this:

web:

docker run -i -t --name web -p 80:80 centos/httpd /bin/bash

db:

docker run -i -t --name db --link web:web centos66/base /bin/bash

but when I run “wget web” in the db container, I got this:

————————————————————————-

bash-4.1# wget 172.17.0.2

--2015-06-17 04:16:31--  http://172.17.0.2/

Connecting to 172.17.0.2:80... connected.

HTTP request sent, awaiting response... 403 Forbidden

2015-06-17 04:16:31 ERROR 403: Forbidden.

————————————————————————-

Am I doing something wrong?

I’m a novice at programming…

I found the answer to my question.

Ignore me, sorry to bother you.

Great article.

But I’ve run into a problem. I tested your scenario in my environment: with icc=false it worked as you described on my physical host, but in a Vagrant-created VM (VirtualBox) it behaves strangely:

1. icc=true, busybox can ping web and wget worked as expected.

2. icc=false, busybox cannot ping web and wget web did not work.

I use a public network for Vagrant, on the same subnet as the physical host.

Have you encountered this problem before? 🙂

When setting the icc=false flag it seems like a “good” way to enhance network security, especially with no container linking allowed.

In this case a compromised container cannot probe ports on containers on the same bridge (good!). Note with icc=true even “dangling” ports can be probed (other containers were run without -p option).

It would seem logical that the only way to get to a container on the same bridge with icc=false is to use the public IP and public port exposed when the container comes up.

Any thoughts on whether this is more secure?

My hope was to add icc=false and then add IP table entries to “lock down” inter-container communication based on how my micro-services connect. Call me paranoid :^)

That’s an interesting thought. I’m just now getting into more of the details of how the containers actually communicate, and it’s done through VETH pairs and network namespaces. I don’t know exactly how the ICC piece is enforced, though, or what mechanism it uses. I likely won’t have much time to play around with it in the short term, but I’d love to hear if you make any progress on this!
